Exploring the AlphaFold Model (Part 1)

If you've been following the computational biology space like I have, you've probably heard about AlphaFold and the protein folding problem. As a data scientist who's been fascinated by this intersection of deep learning and molecular biology, I wanted to explore whether I could build a simpler but effective attention-based protein structure prediction model on my own. In this first part, I'll walk you through getting AlphaFold itself running and using it to predict a protein's structure from its amino acid sequence.

The Protein Folding Challenge: Why It Matters

Proteins are the workhorses of life, responsible for virtually every biological process in our cells. Each protein has a unique 3D structure that determines its function, but experimentally determining these structures is painfully slow and expensive—sometimes taking years and millions of dollars.

If we look at a protein closely, it's essentially a string of amino acids (like beads on a necklace) that folds into a complex three-dimensional shape. While we know the sequences of billions of proteins, we've mapped far fewer of their structures. This gap represents one of the grand challenges in computational biology. That's where computational approaches like AlphaFold come in—they can predict a protein's structure from its amino acid sequence alone, in minutes rather than years.

AlphaFold

Getting AlphaFold running on Google Cloud presented some unique challenges. The original system was designed for powerful research clusters, not the more constrained environment of a Colab notebook, so I had to make some careful adaptations to work within those limits. The trickiest part was getting the OpenMM physics engine (used for the final Amber relaxation step) to work correctly, but once I figured that out, the rest fell into place.

The real magic of AlphaFold comes from how it leverages evolutionary information. As a protein evolves, positions that are critical for structure or function tend to stay conserved. Just as important, positions that touch each other in 3D space tend to co-vary: a mutation at one is often compensated by a mutation at the other. By analyzing many related protein sequences, AlphaFold can pick up these patterns and infer which positions are likely to be close to each other in 3D space. To gather this evolutionary information, we need to search large sequence databases. In my cloud implementation, I use three key databases: UniRef (the UniProt Reference Clusters) and MGnify, provided by the European Bioinformatics Institute, and the Big Fantastic Database (BFD), created by Martin Steinegger and Johannes Söding.
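To make the co-variation idea concrete, here is a toy sketch that scores how strongly two alignment columns vary together using mutual information. This is not something AlphaFold computes explicitly (the network learns these relationships internally), and the alignment and function names are hypothetical, chosen only for illustration.

```python
"""Toy co-evolution signal: mutual information between two MSA columns.

Illustrative only; AlphaFold learns such relationships inside the network
rather than computing mutual information directly.
"""
import math
from collections import Counter


def column(msa, i):
    """Return the residues at position i across all aligned sequences."""
    return [seq[i] for seq in msa]


def mutual_information(msa, i, j):
    """Mutual information (in bits) between alignment columns i and j."""
    n = len(msa)
    ci, cj = column(msa, i), column(msa, j)
    pi = Counter(ci)             # residue counts in column i
    pj = Counter(cj)             # residue counts in column j
    pij = Counter(zip(ci, cj))   # joint counts of residue pairs
    mi = 0.0
    for (a, b), count in pij.items():
        p_ab = count / n
        mi += p_ab * math.log2(p_ab / ((pi[a] / n) * (pj[b] / n)))
    return mi


# Hypothetical toy alignment: columns 1 and 3 always change together
# (K goes with E, R goes with D), while column 2 varies independently.
toy_msa = [
    "AKGEL",
    "ARGDL",
    "AKSEL",
    "ARSDL",
]

print(round(mutual_information(toy_msa, 1, 3), 3))  # co-varying pair
print(round(mutual_information(toy_msa, 1, 2), 3))  # independent pair
```

Classical contact-prediction methods refine this kind of score considerably, for example by correcting for indirect correlations; raw mutual information is just the simplest version of the idea.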

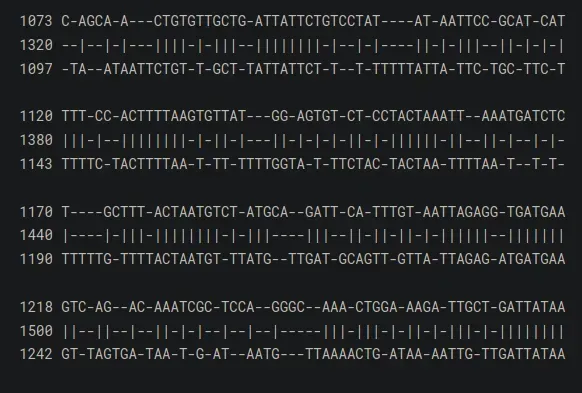

For each protein sequence we want to analyze, we use a tool called Jackhmmer (part of the HMMER suite) to search these databases for homologous sequences. The result is a Multiple Sequence Alignment (MSA), which is essentially a matrix with one row per related sequence and one column per position, showing how the amino acids vary at each position across evolutionarily related proteins. One fascinating thing about this process is watching the search find distant evolutionary relatives. For some well-studied proteins, I found thousands of related sequences; for more unusual ones, I found only a handful. This directly affects prediction accuracy, since more related sequences generally mean better predictions.
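The sketch below shows roughly what one search step could look like from Python, assuming `jackhmmer` is installed and on the `PATH`. The helper functions, file names, and default values are hypothetical; the flags are standard jackhmmer options (`-A` saves the alignment of hits in Stockholm format, `-N` sets the number of search iterations). Counting the distinct sequence names in that Stockholm file gives the MSA depth that matters so much for accuracy.

```python
"""Sketch: build a jackhmmer command and count hits in its Stockholm output.

Helper names and default values are hypothetical; the flags are standard
HMMER jackhmmer options (-o, -A, --noali, --cpu, -N, -E).
"""
import shutil
import subprocess


def build_jackhmmer_cmd(query_fasta, database_fasta, out_sto,
                        n_iter=1, cpus=4, evalue=1e-4):
    """Return the argv list for one jackhmmer search."""
    return [
        "jackhmmer",
        "-o", "/dev/null",   # discard the human-readable report
        "-A", out_sto,       # save the alignment of hits (Stockholm format)
        "--noali",           # leave alignments out of the main output
        "--cpu", str(cpus),
        "-N", str(n_iter),   # maximum number of search iterations
        "-E", str(evalue),   # reporting E-value threshold
        query_fasta,
        database_fasta,
    ]


def msa_depth(stockholm_text):
    """Count distinct sequences in a Stockholm alignment.

    A sequence can be split across several blocks, so we count unique
    names rather than lines. Markup lines start with '#'; '//' ends it.
    """
    names = set()
    for line in stockholm_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or line == "//":
            continue
        names.add(line.split()[0])
    return len(names)


def run_search(query_fasta, database_fasta, out_sto):
    """Run jackhmmer if it is installed and return the MSA depth."""
    if shutil.which("jackhmmer") is None:
        raise RuntimeError("jackhmmer not found on PATH")
    cmd = build_jackhmmer_cmd(query_fasta, database_fasta, out_sto)
    subprocess.run(cmd, check=True)
    with open(out_sto) as handle:
        return msa_depth(handle.read())
```

Building the argument list separately from running it makes the command easy to inspect and test without actually searching a multi-gigabyte database.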

With our MSAs in hand, we're ready to run AlphaFold itself. The model has several key components:

- Evoformer blocks that process the MSA and extract evolutionary patterns

- A structure module that converts this information into 3D coordinates

- A confidence predictor that estimates how accurate each part of the prediction is
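To give a feel for the attention mechanism inside the Evoformer, here is a deliberately tiny, pure-Python version of scaled dot-product self-attention across the residues of a single MSA row. It is a simplified sketch, not AlphaFold's code: the real row-wise attention also uses learned query/key/value projections, multiple heads, gating, and a bias from the pair representation. The feature vectors are made up.

```python
"""Toy single-head self-attention over the residues of one MSA row.

A simplified illustration of the attention idea used in Evoformer blocks,
not AlphaFold's implementation (no learned projections, heads, gating,
or pair bias).
"""
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(embeddings):
    """Scaled dot-product attention with queries = keys = values = embeddings.

    embeddings: list of per-residue feature vectors, all of length d.
    Returns (outputs, weights), where weights[i][j] is how much residue i
    attends to residue j.
    """
    d = len(embeddings[0])
    scale = 1.0 / math.sqrt(d)
    weights, outputs = [], []
    for q in embeddings:
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in embeddings]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted average of all residues' features.
        out = [sum(w[j] * embeddings[j][c] for j in range(len(embeddings)))
               for c in range(d)]
        outputs.append(out)
    return outputs, weights


# Hypothetical 2-dimensional features for a 4-residue row.
row = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
out, attn = self_attention(row)
for i, w in enumerate(attn):
    print(i, [round(x, 2) for x in w])
```

Residues with similar features attend mostly to each other, which is the basic mechanism that lets the network relate distant positions in a sequence.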

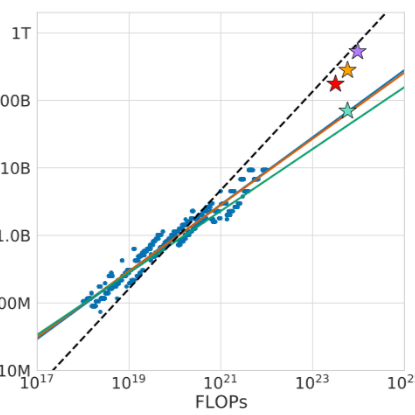

What's happening inside is super interesting: the neural network is essentially learning the physical rules that govern protein folding without being explicitly programmed with them. The output includes not just the 3D coordinates but also confidence scores that tell us which parts of the prediction we can trust. The main confidence metric is pLDDT (predicted Local Distance Difference Test), a per-residue score from 0 to 100, where higher means more confident. Coloring the structure by pLDDT gives a quick visual sense of which regions are likely correct and which should be taken with a grain of salt.
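A small helper like the one below can turn raw pLDDT values into the four confidence bands used by the AlphaFold Protein Structure Database (very high above 90, confident 70-90, low 50-70, very low below 50). The function names are hypothetical, and how to treat a score that sits exactly on a boundary is my own choice here.

```python
"""Map per-residue pLDDT scores to the AlphaFold DB confidence bands."""


def plddt_band(score):
    """Return the confidence band for one pLDDT value (0-100).

    Scores exactly on a boundary (90, 70, 50) go to the lower band;
    that tie-breaking choice is an assumption of this sketch.
    """
    if not 0 <= score <= 100:
        raise ValueError(f"pLDDT must be in [0, 100], got {score}")
    if score > 90:
        return "very high"
    if score > 70:
        return "confident"
    if score > 50:
        return "low"
    return "very low"


def summarize(scores):
    """Count how many residues fall in each band."""
    counts = {"very high": 0, "confident": 0, "low": 0, "very low": 0}
    for s in scores:
        counts[plddt_band(s)] += 1
    return counts
```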

Predicting My First Structure

For my first prediction, I decided to start with a fairly simple target: a small zinc finger protein with just 73 amino acids:

MAAHKGAEHHHKAAEHHEQAAKHHHAAAEHHEKGEHEQAAHHADTAYAHHKHAEEHAAQAAKHDAEHHAPKPH
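Before pasting a sequence into the notebook, it can be worth a quick sanity check. This hypothetical helper strips whitespace, rejects anything outside the 20 standard amino-acid letters, and formats the result as FASTA; the names and the FASTA header are assumptions for illustration, not part of AlphaFold.

```python
"""Sanity-check a query sequence and format it as FASTA.

Helper names and the FASTA header are hypothetical; only the 20 standard
amino-acid one-letter codes are accepted.
"""
from collections import Counter

STANDARD_AA = set("ACDEFGHIKLMNPQRSTVWY")


def validate_sequence(seq):
    """Return the cleaned, upper-case sequence or raise on unknown letters."""
    cleaned = "".join(seq.split()).upper()
    unknown = sorted(set(cleaned) - STANDARD_AA)
    if unknown:
        raise ValueError(f"non-standard residues: {unknown}")
    return cleaned


def to_fasta(name, seq, width=60):
    """Format a sequence as FASTA text, wrapping lines at `width` characters."""
    lines = [f">{name}"]
    lines += [seq[i:i + width] for i in range(0, len(seq), width)]
    return "\n".join(lines) + "\n"


query = validate_sequence(
    "MAAHKGAEHHHKAAEHHEQAAKHHHAAAEHHEKGEHEQAAHHADTAYAHHKHAEEHAAQAAKHDAEHHAPKPH"
)
print(len(query))                     # sequence length
print(Counter(query).most_common(3))  # most frequent residues
print(to_fasta("query", query))
```

Printing the length and the most common residues is a cheap way to confirm the input is what you think it is.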

After entering this sequence and hitting "Run," I watched AlphaFold go through its paces:

- First, it searched the sequence databases, finding about 200 related sequences

- Then it processed these sequences through the neural network

- Finally, it produced a 3D model and relaxed it (a short OpenMM energy minimization that cleans up steric clashes)

The moment of truth came when I visualized the result. As I'd hoped for a zinc finger protein, it folded into a compact structure with a clear alpha-helical pattern. The confidence scores were mostly in the 70-90 range, which the AlphaFold Protein Structure Database labels "confident", suggesting this was likely a reliable prediction. What's remarkable is that the entire process took just a few minutes on a standard Colab GPU. A decade ago, this level of accuracy would have been considered impossible, and even five years ago it would have required massive computational resources.
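One way to put a number on "compact" is the radius of gyration of the C-alpha atoms: the root-mean-square distance of the atoms from their centroid. The sketch below uses the unweighted form (every atom counts equally, a simplification of the mass-weighted definition) and assumes the coordinates have already been read from the predicted PDB file; the two toy coordinate sets are hypothetical.

```python
"""Quantify compactness with the radius of gyration of C-alpha atoms."""
import math


def radius_of_gyration(coords):
    """Unweighted radius of gyration, in the same units as coords (e.g. angstroms).

    coords: list of (x, y, z) tuples, typically one C-alpha per residue.
    """
    n = len(coords)
    if n == 0:
        raise ValueError("need at least one coordinate")
    cx = sum(c[0] for c in coords) / n
    cy = sum(c[1] for c in coords) / n
    cz = sum(c[2] for c in coords) / n
    sq = sum((x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2 for x, y, z in coords)
    return math.sqrt(sq / n)


# Hypothetical comparison: 10 atoms spaced 3.8 A apart in a straight line
# (fully extended) versus the same number packed onto a small 3D grid.
extended = [(3.8 * i, 0.0, 0.0) for i in range(10)]
compact = [(3.8 * (i % 2), 3.8 * ((i // 2) % 2), 3.8 * (i // 4)) for i in range(10)]
print(round(radius_of_gyration(extended), 2), round(radius_of_gyration(compact), 2))
```

A folded domain should have a noticeably smaller radius of gyration than the same chain stretched out.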

Understanding the Predicted Structure

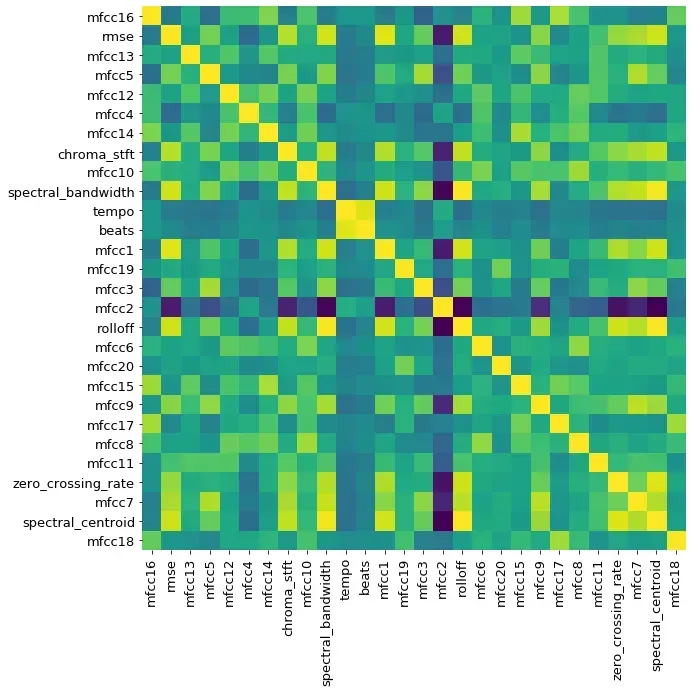

One of my favorite aspects of working with AlphaFold is its set of visualization and analysis tools. After generating a prediction, I can examine it from multiple angles. A pLDDT plot graphs the confidence score for each residue, which helps identify flexible regions. For my zinc finger protein, the plot showed high confidence in the core helical regions, with slightly lower confidence at the termini. This pattern makes biological sense to me, as protein ends are often more flexible and can adopt many conformations, so there is no single structure to predict confidently.
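If you want the numbers behind the pLDDT plot, AlphaFold's PDB output stores each residue's pLDDT in the B-factor column. This sketch reads it from the C-alpha ATOM records using the fixed PDB column positions and groups consecutive low-scoring residues into segments, which is one way to spot floppy termini. The function names and the default cutoff of 70 are assumptions for illustration.

```python
"""Read per-residue pLDDT from an AlphaFold PDB file and find low-confidence
stretches. Helper names and the cutoff are hypothetical."""


def ca_plddt(pdb_text):
    """Return [(residue_number, pLDDT), ...] from C-alpha ATOM records.

    Uses fixed PDB columns: atom name 13-16, residue number 23-26,
    B-factor (where AlphaFold writes pLDDT) 61-66.
    """
    scores = []
    for line in pdb_text.splitlines():
        if line.startswith("ATOM") and line[12:16].strip() == "CA":
            res_num = int(line[22:26])
            scores.append((res_num, float(line[60:66])))
    return scores


def low_confidence_segments(scores, cutoff=70.0):
    """Group consecutive residues with pLDDT below `cutoff` into (start, end) ranges."""
    segments = []
    start = prev = None
    for res, s in scores:
        if s < cutoff:
            if start is None:
                start = res                      # open a new segment
            elif res != prev + 1:
                segments.append((start, prev))   # gap in numbering
                start = res
            prev = res
        elif start is not None:
            segments.append((start, prev))       # close the open segment
            start = None
    if start is not None:
        segments.append((start, prev))
    return segments
```

For a prediction like the one above, you would expect any low-confidence segments to cluster at the first and last few residues.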

Closing Thoughts

I'm very excited to continue experimenting with AlphaFold, and even more excited to see how these models are refined and applied to advanced problems in computational biology. At a time when the ethics of many machine learning applications could generously be described as questionable, it's nice to work with a model whose main purpose is to improve our understanding of science and biology! Complete code can be found on my GitHub here.